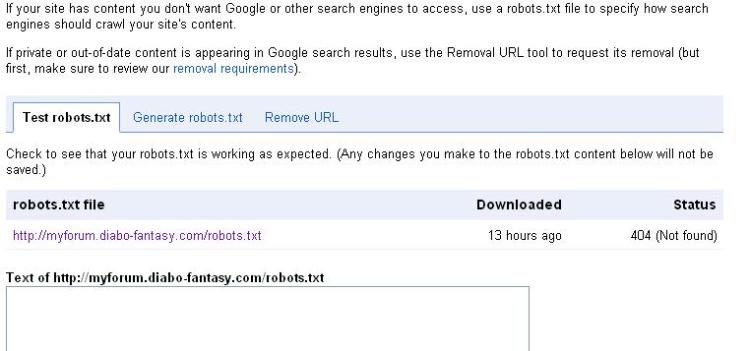

robots.txt Not Found Error 404


http://myforum.diabo-fantasy.com/robots.txt

robots.txt Not Found. Please contact Nabble Support if you need help.

Re: robots.txt Not Found Error 404

Nabble has a static robots.txt file, but it has little value to app owners because NAML allows you to change URLs and build new pages that wouldn't be covered by our static file. At this point, we prefer to guide bots with meta tags and other HTML attributes, but building a custom robots.txt file with NAML should be possible as well.


In reply to this post by MettallicA

How do I make a custom robots.txt file with NAML?


I need this too because I would like to have a Customer Only forum where I could post things that would not be for general circulation. I can restrict users with permissions, but that won't be any good if Google can access private information and paste it all over their SERPs...

Anne

Re: robots.txt Not Found Error 404

Hi Anne, Google can't crawl private forums, so there is no need to worry about the robots.txt file in this case. So, before I explain how to change this, I would like to hear a real need for a custom robots.txt file. MettallicA, do you mind explaining?


Hugo... I have chosen to allow search engine access overall, but I want one forum that search engines can't reach.

Anne

Re: robots.txt Not Found Error 404

If that specific forum is private, then Google simply can't crawl it. There is just no way for Google to access those topics and messages.


In reply to this post by Hugo <Nabble>

I need a custom robots.txt for Google Webmaster Tools.


In reply to this post by Hugo <Nabble>

Sorry... I'm lost... I have set up Anyone to be able to read, and Members to be able to post, but I would like Customers, and only Customers, to be able to get free digital things via the forum... which I believe I can do with Permissions. EDIT: No... that won't work, will it? I will need a 'separate section'... Oh... Is Private achieved by using Login? So... if Google can't access my forum, despite me selecting to allow search engines, then meta tags and keywords aren't going to be much help either... apart from with the likes of Facebook comments which may be picked up by Google. Arrrh... What about my Blog? Is that accessible by Google?

Anne

Re: robots.txt Not Found Error 404

That was Hugo's point. Google can't access anywhere that needs a logon. Its spiders aren't capable of entering a username/password. By default, it will be able to access and analyse any page that an unregistered user can access. A robots.txt file with the appropriate line in it will stop it.
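
To illustrate what such a line could look like, here is a minimal sketch that checks a rule set locally with Python's standard `urllib.robotparser`. The host name and the `/Customers-Only-f123.html` and `/General-f1.html` paths are made up for illustration; substitute the real URL of the sub-forum. Keep in mind that robots.txt only asks well-behaved crawlers to stay away; it does not protect anything, so genuinely private material still needs the "View" permission.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents: ask every crawler to skip one sub-forum
# and leave the rest of the site crawlable. The path is an assumption.
ROBOTS_TXT = """\
User-agent: *
Disallow: /Customers-Only-f123.html
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


BASE = "http://myforum.example.com"  # placeholder host, not a real forum
print(is_allowed(ROBOTS_TXT, "Googlebot", BASE + "/Customers-Only-f123.html"))  # False
print(is_allowed(ROBOTS_TXT, "Googlebot", BASE + "/General-f1.html"))  # True
```

Checking the rules this way before publishing them makes it harder to block the whole site by accident with a stray `Disallow: /`.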

Volunteer Helper - but recommending that users move off the platform!

Once the admin for GregHelp, now deleted.

I can't understand... this seems sooooo simple to me...

I want most of my forum to be accessible to Google ... but this isn't possible because my users have to log in. I want one topic for customers (I suppose) to NOT BE ACCESSIBLE to Google ... but this isn't possible because I have selected to allow search engine access. So basically I can't do what I want! Unless a robots.txt file can help...

Anne


Googlebot and your Nabble App

There are two ways to prevent Googlebot (and other bots) from crawling your pages:

(1) Change the "View" permission so that only registered users or members can view your app. In other words, you force users to log in before accessing the contents of your app.

(2) Go to "Options > Application > Extras & add-ons" and select the "Content / Hide contents from search engines" add-on. This makes your whole app inaccessible to search engines.

If you want this just for a specific sub-app, then option (1) above is the right way to do it.

Creating a robots.txt file

(1) Click on the NAML link in the footer of your app.

(2) Click on the Options dropdown (gear icon) and select "Create new macro".

(3) Enter the code below. The contents of the robots.txt file are defined by the last macro, which contains some sample text. You can edit that macro whenever you want to change the contents of the robots.txt file.

    <override_macro name="url mapper" requires="url_mapper">
        <n.overridden/>
        <n.map_robots/>
    </override_macro>

    <macro name="map_robots" requires="url_mapper">
        <n.regex text="[n.path/]">
            <pattern>
                ^/robots.txt$
            </pattern>
            <do>
                <n.if.find>
                    <then>
                        <n.set_parameter name="macro" value="robots_txt" />
                        <n.exit/>
                    </then>
                </n.if.find>
            </do>
        </n.regex>
    </macro>

    <macro name="robots_txt">
        <n.text_response/>
        Sample Sample Sample
    </macro>
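
Once the macro is saved, it may be worth confirming from outside the app that /robots.txt no longer returns the "Not Found" page and that the sample text has been replaced with real rules. The following is a rough Python sketch using only the standard library; `fetch` and `diagnose` are hypothetical helper names, and the list of directives it looks for is a simplification rather than a full robots.txt validator.

```python
import urllib.error
import urllib.request

# Common robots.txt directives; a simplification chosen for this sketch.
DIRECTIVES = ("user-agent:", "disallow:", "allow:", "sitemap:")


def fetch(url: str, timeout: float = 10.0) -> tuple[int, str]:
    """Fetch url and return (status, body) without raising on HTTP errors."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status, resp.read().decode("utf-8", "replace")
    except urllib.error.HTTPError as err:
        # A 404 such as "robots.txt Not Found" arrives here.
        return err.code, err.read().decode("utf-8", "replace")


def diagnose(status: int, body: str) -> str:
    """Summarise whether a response looks like a usable robots.txt file."""
    if status != 200:
        return f"not served (HTTP {status})"
    lines = [line.strip().lower() for line in body.splitlines()]
    if not any(line.startswith(DIRECTIVES) for line in lines):
        return "served, but contains no robots.txt directives"
    return "ok"
```

To use it, call `fetch` with your app's robots.txt address (for example the URL from the first post) and pass the result to `diagnose`. With the macro above left unchanged, the body would be "Sample Sample Sample", which this check reports as served but without directives.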

Re: robots.txt Not Found Error 404

In reply to this post by mywaytoo

I think you forget the purpose of a search engine. It is to tell people about pages on the web that they can reach. If people have to log in then, by definition, people can't reach them, so of course Google can't show them! You are asking the impossible! You'd better explain why you want people to be able to see the contents of the page when viewed at Google, but you don't want them to get to the page itself. Isn't that complete nonsense? Put that one topic in its own sub-forum and put that behind a login screen, or set the robots.txt not to grant access to that one topic/page. (EDIT: Oops! I see Hugo has already said this bit.)

Volunteer Helper - but recommending that users move off the platform!

Once the admin for GregHelp, now deleted.

Greg... I get on page 1 of Google SERPs for most searches that I've worked on... I know what Google likes!

There we go... I don't think I need worry about the forum helping in this regard. I would have liked to have been able to promote other aspects, but never mind, maybe I can do it from within my blog...

Anne

Re: robots.txt Not Found Error 404

I didn't intend to challenge your knowledge of how Google will analyse a page. I just didn't understand why allowing Google to view a page could be acceptable when a human visitor wasn't. It didn't make sense to me. I was simply curious about the perceived benefit.

Volunteer Helper - but recommending that users move off the platform!

Once the admin for GregHelp, now deleted.
